Thoughts on the Apple Vision Pro

While I haven’t used the Apple Vision Pro yet, I do have some initial thoughts on (1) the way Apple presented it and what that means for VR and AR, and (2) its potential applications in digital, UX and consulting work.

On AR/VR

Apple shows incredible confidence in its real-time operating system’s (RTOS) stability and latency by taking what is, effectively, a VR headset with video passthrough and presenting it as an AR experience by default (“here’s your room and walls, except with more computing in it”). Some thoughts on the AR/VR distinction:

This is the opposite of Meta’s pitch (“you can be anywhere and do anything”), despite a functionally equivalent tech stack: Meta’s headsets could also “put you in your room” with “more computing in it”.

In practice, the upshot may be that Apple’s approach is so quantitatively superior technically (along the axes of passthrough speed, resolution, and the accuracy and intuitiveness of the control system) that the sum of the parts is a qualitatively differentiated end-user experience.

This is a fascinating contrast with the iPhone, or really the iPod, whose success owed more to a qualitatively superior experience (largely down to software). That experience formed a moat around products built from off-the-shelf parts that were quantitatively similar to competing MP3 players.

Time will tell, but Apple’s approach may end up being the minimum viable quality for a screen-mediated AR experience (or perhaps any AR experience). In other words, it’s the 1 that could one day go to 10 or 100.

By that measure, Meta isn’t even off the starting blocks in this particular race, and neither is Microsoft HoloLens.

Benedict Evans has written intelligently on this.

Whether AR experiences will be a bigger market than VR experiences is an open question.

At the same time, it does appear clear that “screen-mediated AR” is probably a better execution of AR than Microsoft HoloLens’s approach of “lighting up” virtual elements on a transparent display layer.

On its applications

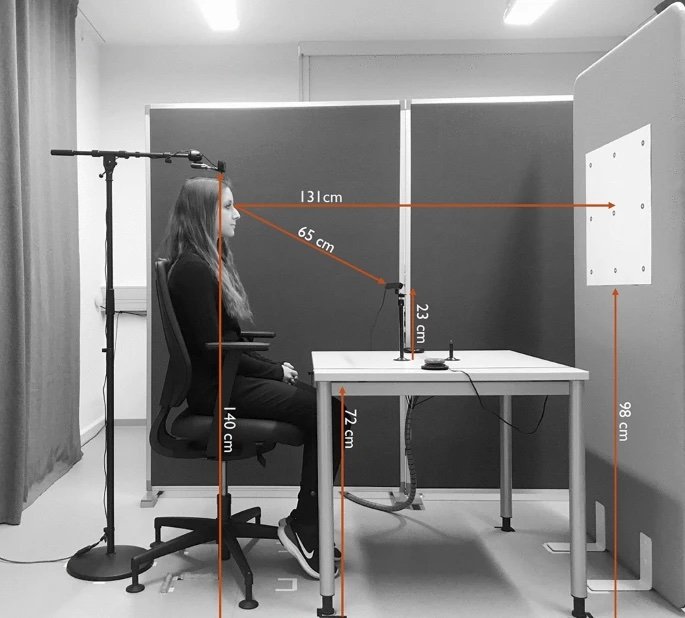

UX researchers will have a field day if and when Apple releases an SDK that gives access to the eye-tracking data!

What once required renting specialised testing rooms, eye-tracking cameras and analytics software could potentially be replaced by a $3,500 headset, with telemetry piped straight out of an iOS fork.

It’s interesting to consider spatial computing in a collaborative professional services context, i.e. a digital version of gathering around a meeting room whiteboard with stickies and markers. Could it put more of the ecosystem’s pieces floating around in plain sight?

Working together in Miro or Mural is fine, but this has the potential to be much more intuitive and immersive (you can’t exactly slack off browsing Twitter if you’re “present” in a virtual collaboration space). Apple also already has a collaborative diagramming and whiteboard tool built into its operating systems: the Freeform app.